💡 Defence in Depth

“Defence in depth”, sometimes also called “layering”, is a central concept in information security. It is the idea that security components should be designed to provide redundancy in the event that one of them fails.

As a bit of history, it is believed that the concept of defence in depth was first introduced by the Roman army as the Roman Empire reached the end of its expansion and entered a more stable period. During the expansion period, Roman territorial borders were heavily guarded and enemy forces, even in small groups, were immediately attacked and repelled. It was a very aggressive strategy which resulted in rapid expansion, as each attack gained a little more ground in enemy territory, but it required a lot of troops to maintain.

As the empire settled, the approach had to change and became more defensive, requiring fewer soldiers. The new strategy focused on slowing down attackers long enough to let the army (now regrouped and located slightly further from the border) gather and intercept them. To do so, the Romans put in place various types of defences one after the other, for example spikes, followed by a trench, followed by a wall. Each defence layer could fairly easily be defeated on its own, but the skills and tools needed to do so were very different for each of them. The invaders would first have to send men to remove the spikes, then get planks to cross the trenches, then get ladders to scale the walls. Even if they became very efficient at eliminating one of the layers, the others would still be there to slow them down. And by then, the few guards posted nearby would have had time to alert the nearest fort and get reinforcements.

In computing, the concept of defence in depth is very similar. It works on the premise that each layer of defence can be penetrated, but the effort needed to penetrate them all is so substantial that either the attacker will give up, or detection will occur before all layers are passed.

The Swiss cheese model

The defence in depth approach derives from a risk concept known as the cumulative act effect, more often referred to as the “Swiss cheese model”. In the Swiss cheese model, security systems are compared to multiple slices of Swiss cheese stacked one behind the other. The characteristic holes in each slice represent weaknesses or vulnerabilities. Because the slices are stacked behind each other ("in series"), the chance of a hole traversing the whole stack from one side to the other is greatly reduced. From a risk perspective, it means that the likelihood of a single point of failure is reduced: even if one layer gets compromised or bypassed, the next one will hopefully catch the threat.

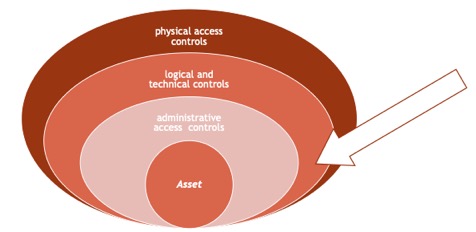
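The effect of stacking slices can be illustrated with a little arithmetic. The sketch below assumes that each layer fails independently of the others, which is an idealisation, and uses made-up failure probabilities; the function name `breach_probability` is purely illustrative.

```python
from math import prod


def breach_probability(layer_failure_probs):
    """Chance that a threat passes every layer, assuming the layers fail independently."""
    for p in layer_failure_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"not a probability: {p}")
    # A threat gets through only if every single layer fails to stop it.
    return prod(layer_failure_probs)


# Hypothetical figures, for illustration only.
single_layer = breach_probability([0.1])
three_layers = breach_probability([0.1, 0.2, 0.3])

print(f"one layer:    {single_layer:.3f}")
print(f"three layers: {three_layers:.3f}")
```

With these assumed figures, three individually imperfect layers let far fewer threats through than the best single layer would. In practice layers are rarely fully independent, which is why the isolation between them matters.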

Applied to information security, the defence in depth approach puts the asset at the centre of the security design, with various layers of protection applied one after the other around it. The most common pattern consists of at least three layers:

- physical access controls, that will physically limit or prevent access to the IT systems (fences, guards, CCTV...)

- logical and technical controls, that will protect systems and resources (encryption, IPS, antivirus...) and

- administrative access controls, that will provide guidance on how to handle systems and data (policies and procedures such as data handling or secure coding practices).

Each of these layers can in turn be divided into even more layers, creating an onion-type design where each security capability works independently from the others. This is an important point: because the layered approach is defined “in series”, each capability must be fully isolated from the other layers so that, if any layer fails, all the others continue to offer optimal protection.

The basic rule of thumb is that:

- each layer must have multiple separate active protections – for example the physical layer could have a fence and CCTV – and

- each protection must not work for multiple layers – for example, the same camera must not be used for both CCTV in the physical layer and facial recognition in the technical layer.
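These two rules are simple enough to check mechanically against an inventory of controls. The following is a minimal sketch, assuming the design is described as a mapping from layer name to a list of protection identifiers; the function `check_layering` and the sample inventory are hypothetical.

```python
from collections import Counter


def check_layering(design):
    """Return rule-of-thumb violations for a {layer: [protections]} mapping."""
    problems = []

    # Rule 1: every layer needs at least two distinct protections.
    for layer, protections in design.items():
        if len(set(protections)) < 2:
            problems.append(f"layer '{layer}' has fewer than two distinct protections")

    # Rule 2: a protection must not be relied on by more than one layer.
    usage = Counter(p for protections in design.values() for p in set(protections))
    for protection, count in sorted(usage.items()):
        if count > 1:
            problems.append(f"protection '{protection}' is shared by {count} layers")

    return problems


# Hypothetical inventory: the same camera feeds both CCTV and facial recognition.
design = {
    "physical": ["fence", "camera-01"],
    "technical": ["firewall", "camera-01"],
    "administrative": ["data handling policy"],
}

problems = check_layering(design)
for problem in problems:
    print(problem)
```

Here the check flags the administrative layer for having a single protection and the shared camera for serving two layers.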

Applying Defence in Depth in the Cloud

While the concept of defence in depth has been around for decades, its adoption really started in the early 2000s, as IT environments became highly networked. Prior to that, security mostly relied on a perimeter approach, where the network borders were heavily protected but little to no protection existed once inside the network. As environments started to face the Internet and new threats emerged, defence in depth brought great strength, especially for internally hosted systems. For organisations with large IT infrastructure hosted in data centres, it was a robust model that allowed them to go beyond the basic perimeter approach. The logical and technical controls became more and more central to the security strategy, with their own sub-layering:

- Network protections, such as firewalls, IPS, DMZ;

- Application protections, such as antivirus, sandboxing, vulnerability scanning;

- Access protections, such as strong password policies, MFA; and

- Data protections, such as encryption, DLP…

The emergence of cloud-based solutions changed things once again. In cloud environments, defence in depth takes a very different form, as some of the protection layers are now controlled by an external party. Strong cloud providers have very secure operations and capabilities, but their offering is designed to satisfy a wide range of customers, which means that, by default, some of the protections an organisation would have put in place to meet its specific needs might be absent.

A good example is the way AWS S3 buckets were commonly used in their early days. AWS S3 buckets are storage capabilities offered as a service. They were created as a very easy means to store web assets for websites. The provider (AWS) manages the infrastructure and OS supporting them, and the customer can simply put data in them, while website visitors can access the assets and display them. The part that AWS manages is extremely well secured, better than most organisations would be able to do. But because of that intended purpose, buckets were very often opened to everyone for read access. This became an issue as organisations started to use these buckets to store sensitive data but did not make the effort to protect them adequately by reviewing and tightening the access settings of the service. This resulted in a number of public data breaches a few years ago.
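One way to catch this kind of exposure is to review bucket policies for statements that grant read access to everyone. The sketch below is a deliberately simplified check written with the standard library only: it parses a policy document in the usual JSON policy layout (`Statement`, `Effect`, `Principal`, `Action`) and ignores conditions, ACLs, wildcard patterns such as `s3:Get*` and account-level settings. The helper names and the bucket name `example-bucket` are hypothetical.

```python
import json

# Actions treated as "read" by this simplified check (an assumption, not exhaustive).
READ_ACTIONS = {"s3:GetObject", "s3:*", "*"}


def _as_list(value):
    """Policy fields may hold a single value or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def _is_public(principal):
    """True if the principal is the wildcard, i.e. anyone."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return "*" in _as_list(principal.get("AWS", []))
    return False


def allows_public_read(policy_json):
    """Return True if any Allow statement grants a read action to everyone."""
    policy = json.loads(policy_json)
    for statement in _as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        if not _is_public(statement.get("Principal")):
            continue
        if READ_ACTIONS & set(_as_list(statement.get("Action", []))):
            return True
    return False


# Hypothetical policy that makes every object in the bucket world-readable.
public_policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:GetObject",
        "Resource": "arn:aws:s3:::example-bucket/*",
    }],
})

print(allows_public_read(public_policy))
```

A check like this is only one layer in itself: it complements, rather than replaces, the provider's own access settings and regular configuration reviews.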

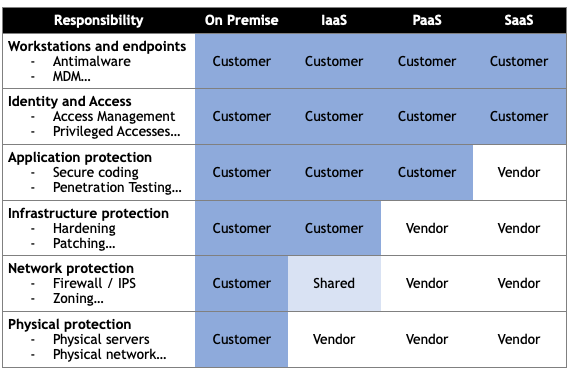

Once you are familiar with the cloud shared responsibility model, it becomes evident that defence in depth still heavily applies to cloud environments. However, since it is now a shared model and not a single model managed centrally, two new critical elements need to be considered:

- Who is responsible for each layer of security? The answer to that question will be different depending on the cloud model used. The table below will give you an idea of what to expect in terms of responsibilities:

- What is the intent of the provider, and does that intent fit my needs? Services offered in the cloud have a specific intent and expected usage that might not be aligned with what you are trying to achieve. This also means that the level of security applied will be adequate for that specific intent only and not necessarily sufficient for your specific use case. For example, if a service is designed for public distribution of data to a large number of consumers, its security capabilities might not be adequate for storing highly sensitive and restricted data.

Applications

Looking at some of the main public security breaches of the last few years, it is quite obvious that some level of defence in depth could potentially have prevented, or reduced the impact of, a number of them. A large number of these breaches were caused by crypto-lockers, where the attacker introduces malware that encrypts the victim's data and demands a ransom to release the key that will allow the data to be decrypted. If we look at the intrusion method, these attacks are usually perpetrated using one of two patterns:

- Attackers trick the victim into installing the malware (by sending it as a fake email attachment, hiding it on a website or sending a link that will trigger its deployment). In this scenario, we can already see two layers failing: the user did not recognise the risk and the anti-malware solution (if any) did not stop it. But a very basic third layer could be in place to limit the damage: backups. If the data is backed up regularly, it can be retrieved even if the attack succeeds.

- Another pattern is the attacker using a phishing email to trick the user into entering their credentials on a fake website. The attacker now has valid credentials allowing them to get into systems exposed to the internet and deploy the crypto-locker. In this scenario again, the human layer failed, and then the identity layer was compromised. Ensuring at the network level that only trusted (registered) devices can connect to a system, or limiting access to devices coming from a specific subnet (a VPN, for example), are additional controls that could be used to limit the risk of failure.
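The second pattern shows why valid credentials alone should not be enough. As a minimal sketch, assuming a hypothetical VPN subnet and device registry, an access decision can require the identity, device and network checks to pass independently:

```python
import ipaddress

# Hypothetical values, for illustration only.
VPN_SUBNET = ipaddress.ip_network("10.8.0.0/24")
REGISTERED_DEVICES = {"laptop-0042", "laptop-0107"}


def may_connect(source_ip, device_id, credentials_valid):
    """Allow access only if the identity, device and network layers all agree."""
    if not credentials_valid:
        return False  # identity layer
    if device_id not in REGISTERED_DEVICES:
        return False  # device layer: only registered devices
    # Network layer: the connection must come from the VPN subnet.
    return ipaddress.ip_address(source_ip) in VPN_SUBNET


# An employee on the VPN with a registered laptop.
employee = may_connect("10.8.0.15", "laptop-0042", credentials_valid=True)

# An attacker with phished (valid) credentials, connecting from the internet.
attacker = may_connect("203.0.113.7", "unknown-device", credentials_valid=True)

print(employee, attacker)
```

In this sketch the phished credentials pass the identity check, but the request is still refused because the device and the source address do not match what is expected.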

If you are in the early stages of your security journey, it is pretty evident that all these layers take time to implement and it is unrealistic to expect them all to be in place at once. But a plan to build them up over time can help.

- Consider starting with your perimeter and external network layers. Protect everything in direct connection to the internet, deploying firewalls, antivirus, IPS… and making sure they are adequately monitored.

- Continue with your users, as they are the most unpredictable and most likely to fail you. You need to ensure strong authentication (with MFA) and user training are in place.

- Then ensure your infrastructure layer is robust, with encryption, internal network segregation, regular patching and vulnerability detection capabilities.

- And then move toward more advanced practices at the application layer, with application sandboxing, good coding practices, DLP…