💡Agentic AI's Identity Crisis

Key Points: Agentic AI's Identity Crisis

- Agentic AI creates new identity risks: autonomous agents act like humans but lack traditional identity controls.

- Weak authentication and access controls leave agents vulnerable to misuse and cascade attacks.

- Poor governance and lifecycle management cause “identity sprawl” and orphaned, unmonitored agents.

- Accountability gaps make it hard to trace actions or assign responsibility.

- Stronger identity frameworks, such as unique credentials, least privilege, audits, and human oversight, are essential for safe AI adoption.

Agentic AI – autonomous AI “agents” that can perceive, decide, and act with minimal human input – is rapidly entering business workflows. These AI agents might schedule meetings, manage systems, or even execute transactions on our behalf. For security leaders, this trend feels like déjà vu with a twist. As with the rise of cloud and DevOps, identity is emerging as the crux of the challenge, except that this time the stakes and complexity are unprecedented. Industry estimates suggest that most enterprises experimenting with AI agents have not yet extended their identity and authentication controls to these non-human actors, leaving an authentication gap that existing programmes were never designed to cover. If autonomous agents are deployed without robust identity safeguards, we risk a sprawl of ungoverned digital personas operating at machine speed: a perfect recipe for security incidents.

Agentic AI and “Non-Human” Identities

Why is identity such a focal point? Traditionally, security programs revolved around human identities: employees and contractors in a central directory, with defined roles, periodic access reviews, and predictable off-boarding. Machine identities (like service accounts and APIs) later added volume and complexity, but we adapted our governance to manage them. Agentic AI breaks this model entirely. These AI agents represent a new class of identity: they behave with human-like intent and decision-making, yet operate with the relentless speed and scale of software. They’re decentralised by default (spun up wherever needed), easy to create (a single developer, or even another AI, can instantiate one in minutes), and able to act across multiple systems without direct human oversight. From an identity perspective, this is a very volatile mix. Agents authenticate to services, authorise actions, and generate downstream events much as users do, but they don’t fit neatly into our existing identity and access management (IAM) frameworks.

Crucially, unlike a human user, an AI agent isn’t tied to personal accountability by default. It’s a non-human identity (NHI): a digital actor that is not traceable to a single flesh-and-blood person. Enterprises may suddenly find themselves managing not just employee accounts but a multitude of autonomous agent identities working 24/7. According to Gartner, agentic AI is on track to become ubiquitous: it predicts that one-third of enterprise applications will include agentic AI by 2028, up from under 1% in 2024. Now is the time to get ahead of how these AI identities are created, controlled, and audited.

In this article, we’ll explore the key identity-centric challenges posed by agentic AI through a cybersecurity and risk lens, including authentication, access control, identity governance, and accountability. We’ll also share practical recommendations for technology leaders and CISOs to address these issues before they undermine our security and compliance posture.

Identity Challenges with Autonomous AI Agents

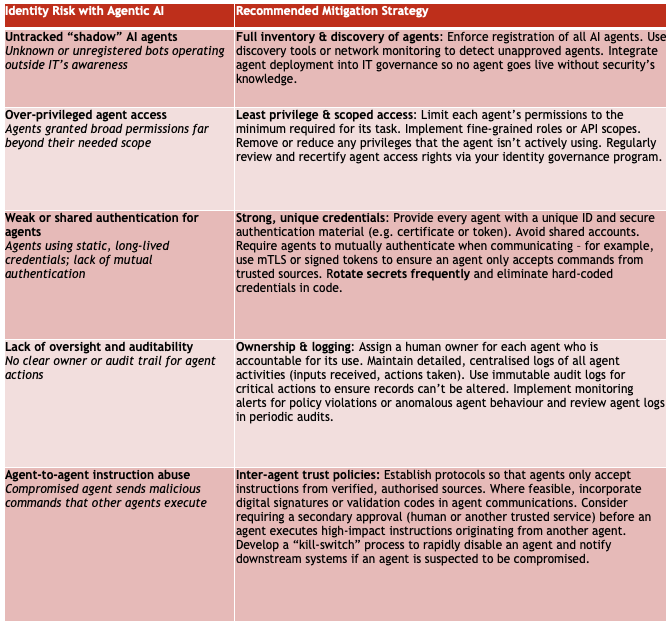

1. Authentication & Access Control Gaps

Agentic AI raises fundamental questions about authentication, both for the agents themselves and for the commands they execute. Many organisations today allow AI agents to operate using generic or inherited credentials. For example, an agent might run under a developer’s account or use a static API token shared across agents. This is dangerous. If an AI agent’s credentials aren’t unique and strongly managed, it becomes easy prey for attackers. One survey found that 23% of IT professionals reported their AI agents had been tricked into revealing access credentials, and 80% of companies saw bots take “unintended actions”. Both outcomes point to weak authentication and command validation.

A related issue is agent-to-agent communication. To accomplish complex tasks, one agent often must invoke or pass instructions to others. Without a way to verify identities and establish trust between agents, a rogue or hijacked agent can send malicious instructions that perfectly legitimate agents will follow. Traditional authentication controls struggle here: certificates and tokens can establish an agent’s identity, but how does an agent decide whether another agent’s request is appropriate? Today’s security mechanisms rarely account for an AI agent evaluating the legitimacy of a peer’s command. This opens the door to novel attacks. For example, a malicious actor could compromise one agent and then use its identity to issue rogue commands to other connected agents, effectively chaining compromises. By the time the breach is detected and the first agent’s credentials are revoked, several others may already have executed the bad instructions in good faith. This “cascade” effect is an authentication and access control nightmare with no direct precedent in human user workflows. It demands new thinking about continuous verification of actions: not just at login, but at each step of an agent’s autonomous process.
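The per-step check described above can be sketched in a few lines. This is a minimal Python illustration under stated assumptions. The receiving agent holds a hypothetical `PeerPolicy` that lists which actions each known peer may request, plus a centrally maintained revocation set. It covers only the authorisation decision. Authenticating the sender is assumed to happen separately, for example with certificates or signed tokens.

```python
from dataclasses import dataclass, field


@dataclass
class PeerPolicy:
    """Hypothetical per-peer policy held by a receiving agent (illustration only)."""
    allowed_actions: dict[str, set[str]] = field(default_factory=dict)  # sender_id -> permitted actions
    revoked: set[str] = field(default_factory=set)  # sender ids revoked by the central system

    def authorise(self, sender_id: str, action: str) -> bool:
        # Re-evaluated on every request, so a revocation takes effect on the
        # very next instruction rather than at the sender's next login.
        if sender_id in self.revoked:
            return False
        # Unknown senders get an empty permission set: deny by default.
        return action in self.allowed_actions.get(sender_id, set())


policy = PeerPolicy(allowed_actions={"scheduler-agent": {"read_calendar", "create_event"}})
print(policy.authorise("scheduler-agent", "create_event"))   # True
print(policy.authorise("scheduler-agent", "wire_transfer"))  # False
policy.revoked.add("scheduler-agent")
print(policy.authorise("scheduler-agent", "create_event"))   # False
```

Checking per request narrows the cascade window, but it cannot undo instructions that were already executed before the revocation.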

Moreover, AI agents often require broad access to fulfil their missions – they might need to read emails, query databases, or control IoT devices. Granting such wide privileges “just to make it work” is a common shortcut when deploying agents under time pressure. Unfortunately, over-privileged agents violate the principle of least privilege and expand the attack surface dramatically. If one gets compromised, the attacker can potentially do far more damage than if that agent had tightly scoped permissions. Yet over 95% of enterprises using agentic AI haven’t integrated these agents into their usual identity protections like Public Key Infrastructure or privileged access management. In short, authentication and authorisation controls for agents are lagging far behind the enthusiasm for deploying them.

2. Identity Governance & Lifecycle Management Challenges

In well-run IAM programs, every human account is created through a defined process, given appropriate access, monitored, and eventually deactivated or removed when no longer needed. With autonomous agents, such governance processes are often missing. AI agents can be spun up by end-users or lines of business as easily as installing a new app. This is effectively a form of Shadow IT in which an unsanctioned bot gains access to corporate systems. Visibility is the first issue: security teams may not even know an agent exists, let alone what resources it’s touching. Compounding this, agents are dynamic. They might be created ad hoc for a particular project or task and then forgotten. Far too few organisations assign clear ownership for AI agents or track their lifecycle state. As a result, it’s common to find orphaned agents still running with valid credentials long after the employee or team that launched them has moved on. Each orphaned or unmonitored agent is an identity time bomb: an account that attackers can target, and one that standard off-boarding routines won’t catch.

Scale is another governance headache. With potentially hundreds of AI agents operating, “identity sprawl” can outpace what current identity governance tools can handle. Traditional Identity Governance and Administration (IGA) systems were built to manage employees and some service accounts in a central directory. AI agents, however, might not live in one directory at all. They can exist across cloud platforms, local machines, SaaS tools, and more, and they may spawn sub-agents or use integration accounts of their own. This decentralisation makes it hard to catalogue and control agent identities through a single pane of glass. We risk an environment where non-human identities far outnumber human ones, with many falling outside regular governance. Organisations now have to credential not just employees and partners but a multitude of non-human identities operating in previously trusted zones. That multiplies the attack surface and outstrips security oversight.

Lifecycle management needs rethinking for agents. These bots might have short life spans, spun up via code pipelines and meant to be ephemeral. This calls for fully automated provisioning and de-provisioning. If our processes for granting and revoking access are too slow or manual, they simply won’t be applied consistently to fast-moving AI agents. Indeed, many current CI/CD pipelines deploy agents with hardcoded credentials or API keys that never expire. Without deliberate effort, privileges granted “temporarily” can become permanent. The lack of expiration, regular attestation, or re-certification for agent accounts is a stark governance gap. Over time, agents accumulate privileges and no one revisits whether those access rights are still justified, a scenario that mirrors the worst identity hygiene problems we’ve seen with human users, now happening at machine speed.
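A practical first step is to find the credentials that break a lifetime policy. The sketch below is a minimal Python illustration. The `Credential` record, the `audit` helper, and the one-day `MAX_LIFETIME` are hypothetical choices. In practice, the credential inventory would come from your secrets manager or CI/CD configuration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed policy value for illustration: agent credentials should live at most one day.
MAX_LIFETIME = timedelta(days=1)


@dataclass
class Credential:
    agent_id: str
    issued_at: datetime
    expires_at: Optional[datetime]  # None models a static key that never expires


def audit(creds: list[Credential]) -> list[tuple[str, str]]:
    """Return (agent_id, reason) for each credential that breaks the lifetime policy."""
    findings = []
    for c in creds:
        if c.expires_at is None:
            findings.append((c.agent_id, "never expires"))
        elif c.expires_at - c.issued_at > MAX_LIFETIME:
            findings.append((c.agent_id, "lifetime exceeds policy"))
    return findings


t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
creds = [
    Credential("report-agent", t0, t0 + timedelta(hours=1)),
    Credential("legacy-bot", t0, None),
    Credential("etl-agent", t0, t0 + timedelta(days=365)),
]
print(audit(creds))  # [('legacy-bot', 'never expires'), ('etl-agent', 'lifetime exceeds policy')]
```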

3. Accountability and Auditability

When an autonomous AI makes a decision or performs an action, who is accountable for the outcome? This is both an ethical question and a technical one. From a security standpoint, accountability depends on being able to attribute actions to an identity and on having an audit trail. With human users, we strive for non-repudiation: users authenticate and their actions are logged, so we can later say “Alice changed this setting at 3 PM” and trust that record. With AI agents, achieving non-repudiation is challenging. Many agents operate using service accounts or tokens that don’t easily map to a human operator. An agent might execute a series of transactions, and something might go wrong (say it deletes the wrong data or makes an unauthorised wire transfer). Investigators then need to know which agent did it, under whose direction, and whether it was following a legitimate policy or had gone rogue. Today, many organisations lack the logging and monitoring to answer these questions.

A major concern is the lack of traceable ownership. If an AI agent is tied to a person or team “owner” in the identity store, there is at least someone accountable for its behaviour. When agents proliferate informally, however, you often find logs showing actions by “automation_bot_123” with no clue as to who set it up or why. This opacity undermines both security and compliance. Malicious activity can easily hide in the noise of agent operations if no one is reviewing their logs. And if a breach occurs through an agent, it can be painfully difficult to piece together the chain of events: agents may not record every decision, or may lack sufficient logging by default. The “black box” decision-making of AI (especially when powered by complex machine learning models) further complicates root-cause analysis. We might see the end result (e.g. a file was moved or an email sent) but have no insight into why the agent did so, or on what basis it decided the action was allowable.

This also opens the door to plausible deniability, or simple confusion over who is accountable. Imagine that an AI agent, acting on behalf of a manager, approves a fraudulent transaction. The manager might claim “the AI did it, not me,” while the AI can’t exactly be “disciplined” like a human. Organisations must anticipate this gap. From a risk and compliance perspective, we need to make clear that deploying an AI agent does not absolve its human owners, or the organisation, of responsibility. If anything, it increases the need for oversight. Enforcing accountability requires both policy (clearly assigning responsibility for agent actions) and technical capability (comprehensive, tamper-proof logs and audit trails for every agent action).
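One common way to make an audit trail resistant to silent editing is hash chaining. Each record stores a hash that covers both its own contents and the previous record's hash. The Python sketch below is a minimal illustration with hypothetical field names. Strictly speaking, it makes the log tamper-evident rather than tamper-proof. Someone able to rewrite the whole chain could recompute every hash, and dropping the newest record is not detected. For both reasons, the latest hash would need to be anchored somewhere the agent cannot write to, such as write-once storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list[dict], agent_id: str, owner: str, action: str) -> None:
    """Append a record attributing the action to an agent and its accountable owner."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"agent_id": agent_id, "owner": owner, "action": action, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; editing any record, or deleting any but the last, breaks it."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


log: list[dict] = []
append_entry(log, "payments-agent", "finance-ops", "prepare_batch")
append_entry(log, "payments-agent", "finance-ops", "submit_batch")
print(verify(log))  # True
log[0]["action"] = "nothing_to_see"
print(verify(log))  # False
```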

Finally, consider incident response in an agentic environment. When a human account is compromised, we reset credentials, terminate sessions, and perhaps roll back their changes. With a web of AI agents, if one goes rogue or is hijacked, simply disabling that agent’s identity may not be enough – because other agents might have acted on its prior instructions already, as discussed earlier. Tracing and undoing those downstream actions is an accountability nightmare. Some experts liken it to trying to recall an email after it’s been forwarded around, except here the “forwarding” happens instantaneously between machines. It’s a tall order to design a system that can roll back or alert on all actions initiated by a compromised agent. While this challenge is still largely unsolved in practice, it underscores how much more data and coordination we’ll need in our audit logs and incident playbooks. Without richer accountability measures, agentic AI could create opaque corners of the enterprise where problems fester unseen.
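Tracing downstream actions is only feasible if every logged action records what triggered it. Suppose each action in a hypothetical log carries a `caused_by` pointer to the action that prompted it. Finding a compromised agent's blast radius then becomes a graph traversal. The Python sketch below uses breadth-first search. The log structure and field names are assumptions, not an existing standard.

```python
from collections import deque

# Hypothetical action log: action_id -> which agent acted and which action triggered it.
actions = {
    "a1": {"agent": "agent-A", "caused_by": None},
    "a2": {"agent": "agent-B", "caused_by": "a1"},  # B acted on A's instruction
    "a3": {"agent": "agent-C", "caused_by": "a2"},  # C acted on B's, so transitively on A's
    "a4": {"agent": "agent-D", "caused_by": None},  # unrelated
}


def downstream_of(compromised_agent: str, log: dict) -> set[str]:
    """Actions taken by the agent, plus every action they triggered, directly or transitively."""
    children: dict[str, list[str]] = {}
    for action_id, rec in log.items():
        if rec["caused_by"] is not None:
            children.setdefault(rec["caused_by"], []).append(action_id)

    queue = deque(a for a, rec in log.items() if rec["agent"] == compromised_agent)
    seen = set(queue)
    while queue:
        current = queue.popleft()
        for child in children.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


print(sorted(downstream_of("agent-A", actions)))  # ['a1', 'a2', 'a3']
```

The result is the list of actions to review or roll back. Deciding how to reverse each one remains a manual, system-by-system exercise.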

Strengthening Identity Security for Agentic AI

Despite these challenges, we can address the risks of agentic AI by adapting the identity and security disciplines we already know. The goal of good security is not to halt innovation but to guide it safely, so that organisations embrace autonomous agents with eyes wide open and proper controls in place. Here are practical steps and considerations for technology leaders and security teams:

Treat AI Agents as First-Class Identities: Include autonomous agents in your Identity and Access Management (IAM) and Zero Trust strategies from day one. That means every agent should have a unique, trackable digital identity, not a shared account or an anonymous token. Integrate agent identities into your central identity provider or directory services where possible, just as you would a new employee or service account. This lays the groundwork for enforcing policies on agents consistently. Don’t allow “ghost” agents to roam free.

Establish Clear Ownership and Accountability: Require that every AI agent is tied to a responsible owner (an individual or a product team) in your records. This creates a chain of accountability. If an agent behaves unexpectedly, you know whom to contact and who must take corrective action. Make it policy that deploying an agent includes registering it in an internal inventory with details like purpose, owner, and scope of allowed actions. Much like any critical asset, if an agent has no owner, it shouldn’t be running. Consider tagging agent accounts with metadata indicating their creator or sponsor. This clarity will also help during audits and when demonstrating control over AI usage to regulators or clients.
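The “no owner, no agent” rule can be enforced at registration time. The same inventory can then be used to spot agents that were never registered. This is a minimal Python sketch. `AgentRecord` and `AgentInventory` are hypothetical names, and a real inventory would live in your IAM or IGA platform rather than in memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    owner: str          # individual or team accountable for the agent
    purpose: str
    scopes: frozenset   # actions the agent is allowed to perform


class AgentInventory:
    """Hypothetical in-memory registry used for illustration only."""

    def __init__(self) -> None:
        self._records: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        # Policy from the text: an agent with no owner should not be running.
        if not record.owner.strip():
            raise ValueError(f"{record.agent_id}: an accountable owner is required")
        if not record.purpose.strip():
            raise ValueError(f"{record.agent_id}: a stated purpose is required")
        self._records[record.agent_id] = record

    def unregistered(self, observed_ids: set[str]) -> set[str]:
        """IDs seen in logs or network traffic that nobody registered (possible shadow agents)."""
        return observed_ids - set(self._records)


inv = AgentInventory()
inv.register(AgentRecord("report-agent", "marketing-analytics", "weekly campaign reports",
                         frozenset({"crm:read"})))
print(inv.unregistered({"report-agent", "automation_bot_123"}))  # {'automation_bot_123'}
```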

Enforce Least Privilege Access: Apply stringent role-based or attribute-based access controls (RBAC/ABAC) to AI agents, at least as strictly as you do for users. Avoid the temptation to give an agent “god-mode” access to all systems. Instead, grant permissions incrementally, aligned to the agent’s specific tasks. If an agent is meant to generate marketing reports, for example, it probably doesn’t need access to financial transaction systems. Use fine-grained scopes for API tokens and separate accounts for different functions. Also implement time-bound access where feasible: let agents access sensitive resources only for the duration of a task or campaign. By containing what an agent can do, you dramatically reduce the blast radius if it goes haywire. Review agent access rights regularly (e.g. through quarterly access recertifications) to remove privilege creep. Your identity governance tools will need to evolve so that agents are included in their certification campaigns.
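Scoped, time-bound access reduces to a deny-by-default check. A request is allowed only if a grant matches the exact scope and the current time falls inside that grant's window. The Python sketch below is illustrative. The `Grant` type, the `is_permitted` helper, and the `"crm:read"` scope naming are hypothetical, not any particular product's model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    agent_id: str
    scope: str            # e.g. "crm:read"; the naming scheme is illustrative
    not_before: datetime
    not_after: datetime   # access lapses on its own; nobody has to remember to revoke it


def is_permitted(grants: list[Grant], agent_id: str, scope: str, at: datetime) -> bool:
    """Deny by default; allow only if a grant for this exact scope is currently in force."""
    return any(
        g.agent_id == agent_id and g.scope == scope and g.not_before <= at < g.not_after
        for g in grants
    )


start = datetime(2025, 3, 1, 9, 0, tzinfo=timezone.utc)
grants = [Grant("report-agent", "crm:read", start, start + timedelta(hours=8))]
print(is_permitted(grants, "report-agent", "crm:read", start + timedelta(hours=1)))        # True
print(is_permitted(grants, "report-agent", "payments:write", start + timedelta(hours=1)))  # False
print(is_permitted(grants, "report-agent", "crm:read", start + timedelta(hours=9)))        # False
```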

Institute Robust Authentication (for and between Agents): Strengthen how agents authenticate to systems and to each other. Ideally, issue unique credentials or cryptographic certificates for each agent identity. Modern approaches include mutual TLS (mTLS) or signed tokens that allow agents to prove their identity to one another. Leverage your Public Key Infrastructure. Just as employees might use certificate-based authentication for VPNs, agents can use certificates to sign their requests, so that receiving systems can verify “Yes, this is a known, trusted agent.” Avoid static passwords or API keys that never change: sooner or later they leak or get cracked. Implement automated credential rotation for agent accounts and short-lived tokens (for example, OAuth 2.0 access tokens with short expiry and refresh). Furthermore, treat unknown agents as untrusted by default. If Agent A receives a command or data from an agent it doesn’t recognise, it should either reject it or sandbox and verify it. Emerging protocols are starting to address this. Anthropic’s Model Context Protocol (MCP), for example, standardises how AI applications connect to external tools and data sources, and it defines an OAuth-based authorisation flow. Until such standards mature, enterprises may need to implement their own allow-lists or validation checks for inter-agent interactions. For instance, only agents holding a specific signed token issued by your central system might be allowed to talk to your internal agent network.
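The allow-list idea can be sketched with the Python standard library: a short-lived token, signed by a central issuer, that receiving agents verify before acting. The sketch makes several assumptions. It substitutes a shared HMAC key for the per-agent certificates discussed above, because that keeps it self-contained. It also uses a five-minute lifetime and a hard-coded allow-list. A production design would more likely use per-agent asymmetric keys from your PKI (mTLS or signed JWTs) and bind tokens to the presenting client.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Illustrative assumptions: one symmetric key shared by issuer and verifiers,
# a 5-minute token lifetime, and a static allow-list of trusted agents.
ISSUER_KEY = b"replace-with-a-secret-from-your-vault"
TOKEN_TTL_SECONDS = 300
ALLOWED_AGENTS = {"scheduler-agent", "report-agent"}


def _sign(payload: str) -> str:
    return hmac.new(ISSUER_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(agent_id: str, now: Optional[float] = None) -> str:
    """Issue a short-lived token asserting the agent's identity."""
    now = time.time() if now is None else now
    claims = {"sub": agent_id, "exp": now + TOKEN_TTL_SECONDS}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    return f"{payload}.{_sign(payload)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the agent ID if the token is authentic, unexpired and allow-listed, else None."""
    now = time.time() if now is None else now
    payload, sep, sig = token.rpartition(".")
    # compare_digest performs a constant-time comparison to avoid timing leaks.
    if not sep or not hmac.compare_digest(sig.encode(), _sign(payload).encode()):
        return None
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if claims["exp"] <= now or claims["sub"] not in ALLOWED_AGENTS:
        return None
    return claims["sub"]


token = issue_token("scheduler-agent", now=1_000.0)
print(verify_token(token, now=1_100.0))  # scheduler-agent
print(verify_token(token, now=1_400.0))  # None (expired at 1_300)
print(verify_token(issue_token("rogue-agent", now=1_000.0), now=1_100.0))  # None (not allow-listed)
```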

Continuous Monitoring and Behaviour Analytics: Given the speed of agentic operations, real-time monitoring is essential. Deploy anomaly detection for agent behaviour, just as many organisations do for user accounts. If an AI agent that usually queries database records suddenly tries to delete files or access an unrelated system, trigger an alert or automated containment. Build baseline profiles for each agent’s normal activities (volume of transactions, typical resources accessed, time of operation, etc.) and use AI itself to flag deviations. Importantly, centralise logs from all agent actions across your environment. Ensure logging is comprehensive and tamper-resistant: every important decision or action an agent takes should be recorded with timestamps and agent IDs. Where possible, include contextual data in logs (e.g. “Agent X acted because it received instruction Y from source Z”). This level of telemetry not only helps detect misuse but is also invaluable for forensics and accountability. Consider implementing immutable (write-once) audit logs for critical agent transactions to prevent an advanced agent, or an attacker, from covering its tracks.
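Baselining can start much simpler than a machine-learning model. The Python sketch below uses plain statistics as a stand-in for the AI-driven detection mentioned above. It flags any action type the agent has never performed before, and any hour whose action count sits more than three standard deviations above the agent's historical mean. The hourly granularity, the threshold of 3, and the floor of 1.0 on the spread are illustrative assumptions.

```python
from statistics import mean, pstdev


def build_baseline(history: list[list[str]]) -> tuple[set[str], float, float]:
    """history holds one list of action names per hour of known-good operation."""
    known_actions = {a for hour in history for a in hour}
    volumes = [len(hour) for hour in history]
    return known_actions, mean(volumes), pstdev(volumes)


def check_hour(actions: list[str], baseline: tuple[set[str], float, float],
               z_threshold: float = 3.0) -> list[str]:
    """Return human-readable alerts for one hour of an agent's activity."""
    known_actions, mu, sigma = baseline
    alerts = [f"new action type: {a}" for a in sorted(set(actions) - known_actions)]
    # Floor the spread at 1.0 so a perfectly steady history neither divides by zero
    # nor turns every one-action wobble into a "spike".
    spread = max(sigma, 1.0)
    if (len(actions) - mu) / spread > z_threshold:
        alerts.append(f"volume spike: {len(actions)} actions vs mean {mu:.1f}")
    return alerts


history = [["query_db"] * 10, ["query_db"] * 12, ["query_db"] * 11]
baseline = build_baseline(history)
print(check_hour(["query_db"] * 11, baseline))                    # []
print(check_hour(["query_db"] * 11 + ["delete_file"], baseline))  # ['new action type: delete_file']
```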

Lifecycle Management & “Off-boarding” for Agents: Just as we onboard and off-board employees, do the same for AI agents. Incorporate agent identities into your identity lifecycle management processes. For example, when a project that spawned certain agents is completed, ensure those agents’ access is promptly removed (or the agents are deactivated entirely). Use automation to your advantage: set expiration dates on agent accounts by default, requiring an owner to intentionally extend if the agent is still needed. Regularly sweep for inactive or orphaned agents: if an agent hasn’t checked in or acted in a while, that’s a candidate to disable and eventually delete. Linking agents to human owners, as mentioned, means if an employee leaves the company, you can review any agents they owned and determine if those should be handed over or turned off. This lifecycle discipline prevents “agent sprawl” from accumulating indefinitely. In effect, treat agents with the same rigour you treat temporary contractors: no open-ended access.
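A periodic sweep can apply the three rules above: default expiry, owner departure, and inactivity. The Python sketch below is minimal. The `AgentAccount` fields, the 30-day `INACTIVITY_LIMIT`, and the source of the active-staff list (in practice your HR system or directory) are all assumptions. It only reports candidates; actually disabling them would go through your identity provider.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

INACTIVITY_LIMIT = timedelta(days=30)  # assumed policy value


@dataclass
class AgentAccount:
    agent_id: str
    owner: str
    expires_at: datetime   # set by default at creation; the owner must extend it deliberately
    last_active: datetime


def sweep(accounts: list[AgentAccount], active_staff: set[str], now: datetime) -> dict[str, str]:
    """Map agent_id to the reason it should be disabled; healthy agents are omitted."""
    to_disable = {}
    for acct in accounts:
        if acct.expires_at <= now:
            to_disable[acct.agent_id] = "expired; owner did not extend"
        elif acct.owner not in active_staff:
            to_disable[acct.agent_id] = "owner has left; review or hand over"
        elif now - acct.last_active > INACTIVITY_LIMIT:
            to_disable[acct.agent_id] = "inactive"
    return to_disable


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
accounts = [
    AgentAccount("report-agent", "alice", now + timedelta(days=30), now - timedelta(days=1)),
    AgentAccount("poc-agent", "bob", now - timedelta(days=1), now - timedelta(days=2)),
    AgentAccount("etl-agent", "carol", now + timedelta(days=60), now - timedelta(days=1)),
    AgentAccount("old-bot", "alice", now + timedelta(days=60), now - timedelta(days=90)),
]
active_staff = {"alice", "bob"}
print(sorted(sweep(accounts, active_staff, now)))  # ['etl-agent', 'old-bot', 'poc-agent']
```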

Implement Guardrails and Human Oversight: Not all decisions should be left entirely to machines. Identify high-risk actions in your AI agents’ repertoire and require a human check or approval (a “human in the loop”) for those cases. For instance, an agent could prepare a payments list, but a human must approve it before funds are transferred. Likewise, an agent can draft an email but not send it to all customers without review. These oversight points reduce the chance of catastrophic mistakes and also insert accountability: a human signs off on what the agent prepared. Additionally, program preventive controls into agents. For example, an agent should refuse, or at least pause on, instructions that seem to conflict with policy (such as an order to exfiltrate data or delete backups). In practice, this might mean building ethical or safety checks into the agent’s prompting logic, or using constraint frameworks that limit what an agent can do without higher-level confirmation. Granted, today’s AI sometimes ignores the guardrails it is given, but having them is still necessary. Finally, ensure your incident response plan covers AI agents. Prepare a “kill switch” mechanism: a way to rapidly freeze or quarantine an agent across all systems if it’s behaving maliciously. This could be as simple as a script that revokes the agent’s credentials and notifies every system it interacted with to invalidate pending tasks. Test these emergency procedures in exercises, just as you would a compromised admin account scenario.
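An approval gate and a kill switch can share one choke point that every agent action passes through. In the minimal Python sketch below, `AgentRuntime` and the `HIGH_RISK_ACTIONS` list are hypothetical. High-risk actions are queued until a named human approves them. The kill switch freezes the agent and discards its queued work. Revoking the agent's credentials in each downstream system, as the paragraph above suggests, is outside the scope of this sketch.

```python
# Illustrative list; each organisation would define its own high-risk actions.
HIGH_RISK_ACTIONS = {"transfer_funds", "email_all_customers", "delete_backups"}


class AgentRuntime:
    """Hypothetical choke point through which every agent action is requested."""

    def __init__(self) -> None:
        self.frozen: set[str] = set()                # agents stopped by the kill switch
        self.pending: list[tuple[str, str]] = []     # (agent_id, action) awaiting approval

    def request(self, agent_id: str, action: str) -> str:
        if agent_id in self.frozen:
            return "blocked: agent frozen"
        if action in HIGH_RISK_ACTIONS:
            self.pending.append((agent_id, action))
            return "queued for human approval"
        return "executed"

    def approve(self, agent_id: str, action: str, approver: str) -> str:
        # Naming the approver ties the outcome to an accountable human.
        if (agent_id, action) not in self.pending:
            return "nothing to approve"
        self.pending.remove((agent_id, action))
        return f"executed (approved by {approver})"

    def kill_switch(self, agent_id: str) -> int:
        """Freeze the agent and drop its queued actions; returns how many were dropped."""
        self.frozen.add(agent_id)
        before = len(self.pending)
        self.pending = [p for p in self.pending if p[0] != agent_id]
        return before - len(self.pending)


rt = AgentRuntime()
print(rt.request("payments-agent", "prepare_payments_list"))      # executed
print(rt.request("payments-agent", "transfer_funds"))             # queued for human approval
print(rt.approve("payments-agent", "transfer_funds", "j.smith"))  # executed (approved by j.smith)
```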

Invest in Identity Innovation and Collaboration: The security community is actively working on this frontier. Keep an eye on emerging solutions such as agent identity management tools, some of which are beginning to appear (start-ups and established IAM vendors are developing offerings to manage AI agents’ credentials and policies). Standards bodies are also moving: for example, the OpenID Foundation released a white-paper in late 2025 on AI agent identity challenges, and OWASP’s GenAI Security Project is cataloguing top risks (with mismanaged agent identity and access among the top concerns). Engage with industry groups, share your own lessons, and consider contributing to setting standards around agentic identity. Internally, foster collaboration between your IAM team, AI/ML teams, and software developers. Security architects should work closely with those building or deploying AI agents to bake in identity controls from the start (much like DevSecOps). By proactively doubling down on the “identity-first” security model, extending it to cover all non-human actors, you not only protect your organisation but also help shape best practices in this nascent space.

Looking Forward – Identity as the Backbone of Secure AI Adoption

Implementing the measures above will require effort and possibly new tooling, but it is increasingly non-negotiable. As autonomous AI capabilities mature, identity truly becomes the anchor of trust. We need to know at all times who (or what) is executing actions in our digital environment, and be confident that they are authorised and monitored. The organisations that handle this well will be those that can embrace agentic AI with confidence rather than fear. They’ll unlock the productivity and efficiency benefits of AI “co-workers” while keeping risks in check.

On the other hand, those who ignore the identity dimension do so at their peril. An unmanaged swarm of AI agents can lead to breaches, compliance failures, and chaos that far outweigh any short-term gains. We may even see executive and public backlash in cases where an agent gone rogue causes harm and no one can explain or control it. As CISOs and tech leaders, it’s our job to prevent that outcome by putting guardrails in place now.

A disciplined identity-centric approach, incorporating strong authentication, stringent access control, continuous governance, and clear accountability, can tame the risks of even this novel technology. Reassuringly, we don’t need entirely new governance magic; we need to adapt known IAM best practices to a new context. That means evolving our security mindset: expanding our identity and risk frameworks to cover non-human actors with the same vigilance as human ones.

In Short: Securing Agentic AI Through Identity Governance

Agentic AI promises incredible efficiencies: imagine AI assistants handling mundane tasks or complex analytics at lightning speed. But to realise these benefits safely, organisations must address the identity challenge head-on. That means answering questions like: How do we know who an AI agent really is? What should it be allowed to do? Who is responsible for its actions? The strategies outlined here range from unique digital IDs for agents and least-privilege access to rigorous monitoring and lifecycle controls. By implementing them, we can begin to say “yes” to agentic AI with confidence, but on our terms. AI agents may soon be as numerous as human users in our systems, and getting identity right is the key to keeping autonomy from turning into anarchy.

As we move forward, security leaders should champion the idea that securing agentic AI is first and foremost an identity problem, and one we have to solve together. By doing so, we ensure that autonomous AI becomes a powerful ally in our organisations, not a source of new chaos. In the end, those who succeed will be the ones who recognised early that robust identity governance for AI agents is what enables innovation to scale safely. Let’s take the lead in making that happen, so we can embrace the future of AI with trust and accountability built in from the start.

Disclaimer: This article is not legal or regulatory advice. You should seek independent advice on your legal and regulatory obligations. The views and opinions expressed in this article are solely those of the author. These views and opinions do not necessarily represent those of AMP or its staff. Artificial Intelligence Technology was used to proof-read this article.